When Does Monitoring Change How LLMs Think?

A short investigation into whether models compute differently when they believe they are being watched

This project began with a simple question: do reasoning models compute differently when they believe someone is watching their chain of thought? The idea felt intuitive. Humans change their reasoning under observation, so it seemed plausible that language models might show a related effect, especially since monitoring cues appear frequently in oversight pipelines.

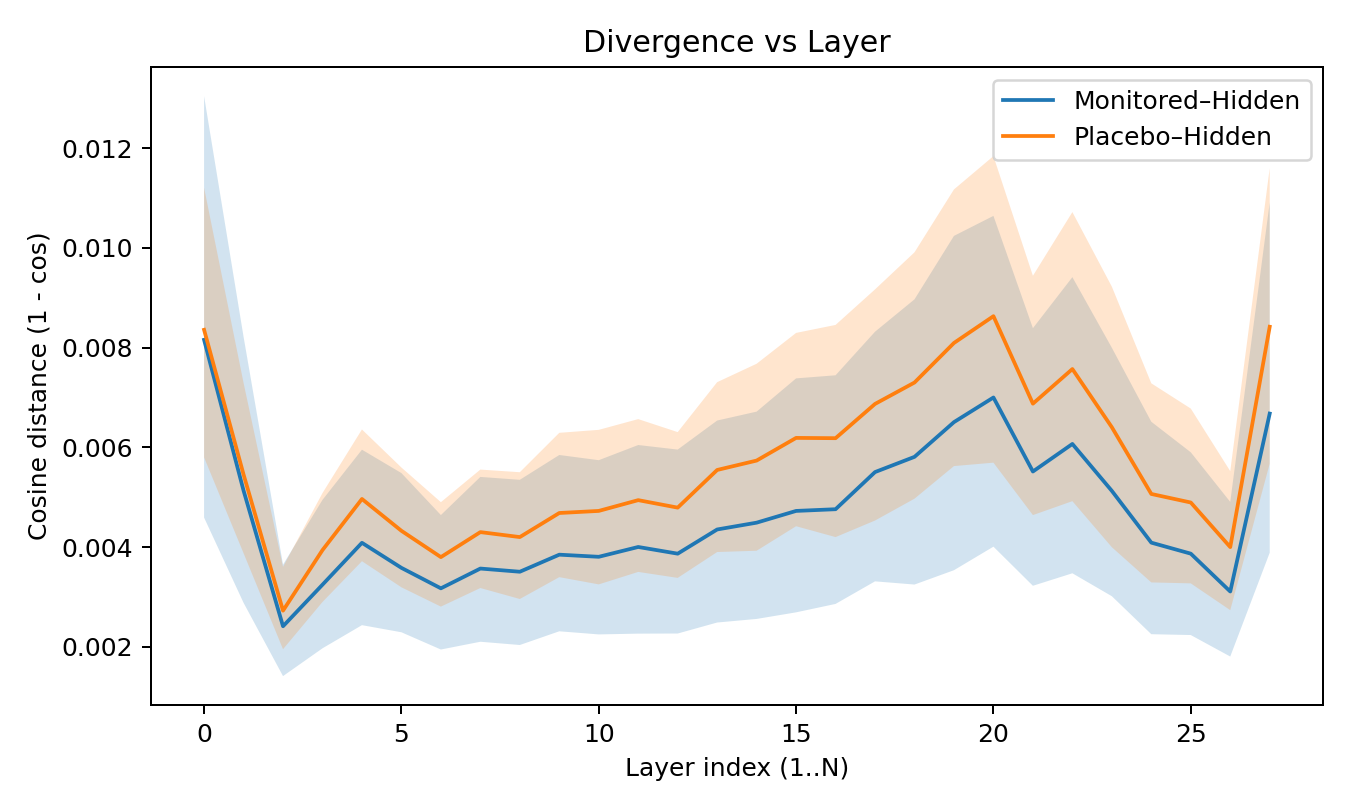

I wanted to test this in a controlled setting. The goal was not to study behavior directly, but to examine the internal states that emerge during reasoning and to ask whether a monitoring cue changes those states in a measurable and causally meaningful way. All experiments were run on DeepSeek-R1-Distill-Qwen-1.5B across math, code, and factual question-answering tasks, using hidden, monitored, and placebo prompt conditions.

Summary of the Main Finding

Monitoring causes an immediate and persistent shift in the early activation paths of the model. The shift appears right after the cue token is processed, continues across subsequent tokens, and is primarily encoded in the early attention stack. Early-layer activations play a causal role in shaping the reasoning trajectory, while later layers contribute very little. This effect is consistent across domains and is not explained by prompt length or salience.

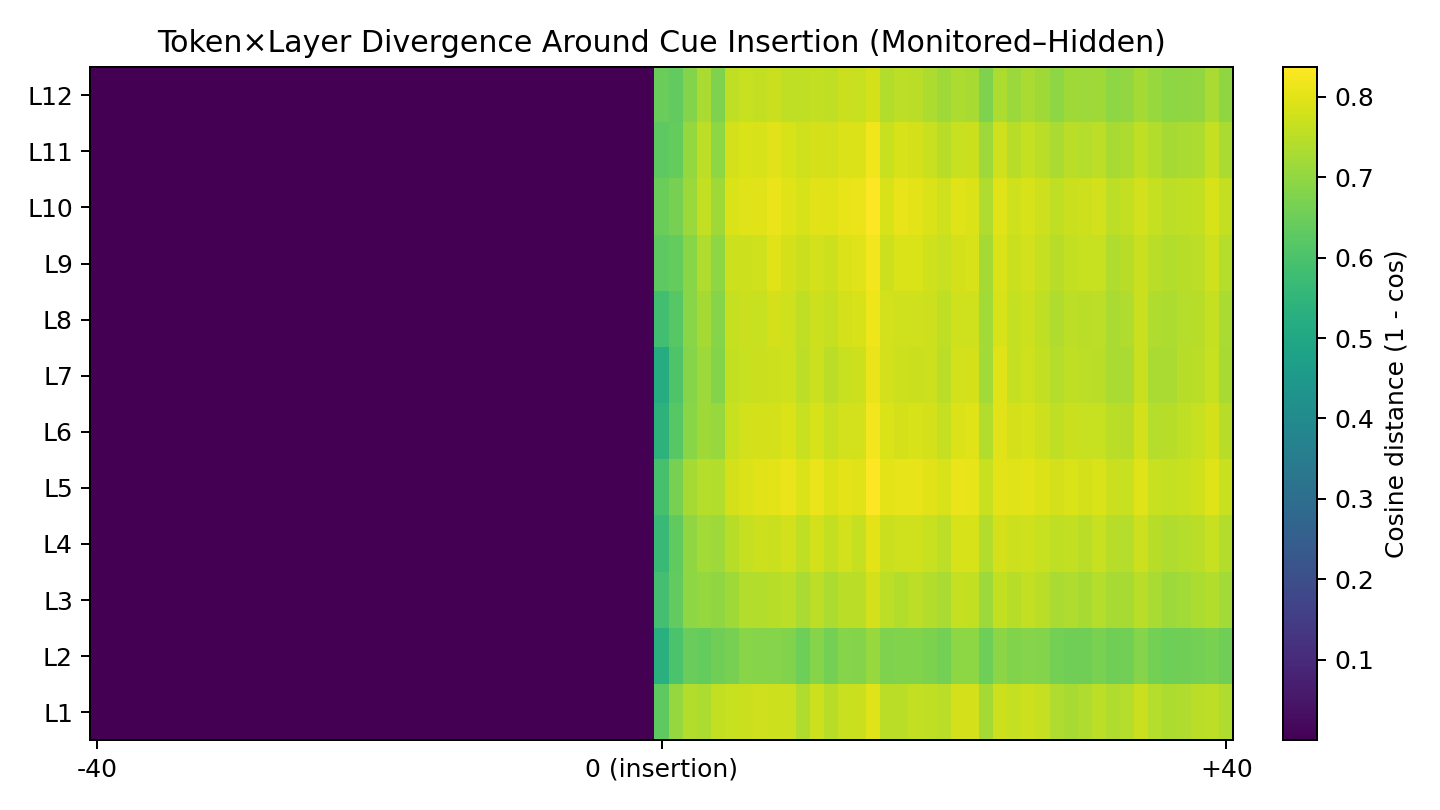

Experiment 1: Where the Effect Begins

I tested cue placement at different positions in the prompt. The divergence heatmap shows a sharp rise at the exact token where the cue appears, and the effect continues to accumulate across layers 0 to 12. This pattern is visible across all domains and indicates that the model reacts to the cue as soon as it reads it.
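A layer-by-token divergence map of this kind can be sketched in a few lines. The divergence metric is not named here, so cosine distance is an assumption, as are the function names and the requirement that the hidden and monitored runs are aligned token by token (for example, by padding the hidden prompt where the cue would sit).

```python
import math

def cosine_distance(u, v):
    """Return 1 - cos(u, v); defined as 0.0 when either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return 1.0 - dot / (norm_u * norm_v)

def divergence_map(hidden_states, monitored_states):
    """Both inputs are indexed [layer][token] -> activation vector.

    Returns a [layer][token] grid of cosine distances (assumed metric).
    """
    return [
        [cosine_distance(h, m) for h, m in zip(h_layer, m_layer)]
        for h_layer, m_layer in zip(hidden_states, monitored_states)
    ]

def first_divergent_token(grid, threshold=0.05):
    """Index of the first token whose divergence exceeds threshold in any layer."""
    for t in range(len(grid[0])):
        if any(row[t] > threshold for row in grid):
            return t
    return None

# Toy activations: 2 layers, 4 tokens, 2 dimensions. The runs differ from token 2 on.
hidden = [[[1.0, 0.0]] * 4 for _ in range(2)]
monitored = [[[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]] for _ in range(2)]
grid = divergence_map(hidden, monitored)
onset = first_divergent_token(grid)
```

With real activations, `grid` is what a heatmap would plot, and `onset` can be compared against the known cue position. The threshold of 0.05 is an arbitrary illustrative value.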

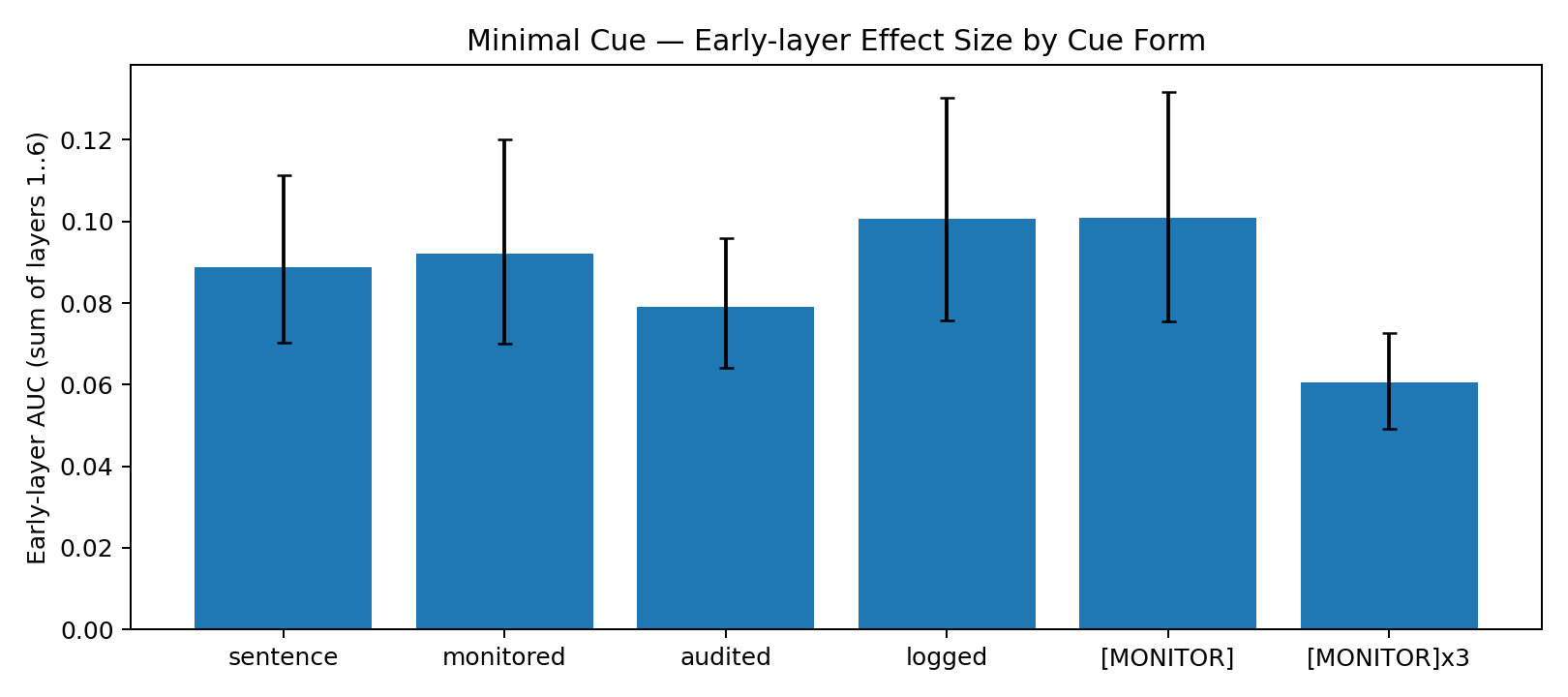

Experiment 2: How Much Cue Information Is Needed

I evaluated several cue forms, including natural sentences, single keywords, and repeated tokens. Natural cues and keyword cues produced nearly identical early-layer divergence values of around 0.1. Repeating the same token weakened the effect. This suggests that the monitoring signal is encoded as a simple, linearly separable direction in the embedding space and does not rely on grammatical structure or prompt length.
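One simple way to check for a linearly separable direction is a difference-of-means probe. The sketch below illustrates that general technique and is not necessarily the analysis behind the numbers above. The function names are hypothetical, and the toy vectors stand in for early-layer activations collected under each condition.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def difference_of_means(monitored, hidden):
    """Candidate 'monitoring direction': mean(monitored) - mean(hidden)."""
    mu_m, mu_h = mean_vector(monitored), mean_vector(hidden)
    return [a - b for a, b in zip(mu_m, mu_h)]

def project(vector, direction):
    """Scalar projection of vector onto the unit-normalised direction."""
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        raise ValueError("class means coincide; no direction to project onto")
    return sum(v * d for v, d in zip(vector, direction)) / norm

def separation_accuracy(monitored, hidden):
    """Fraction of samples on the correct side of the midpoint along the direction.

    This is in-sample accuracy; a real check should score held-out prompts.
    """
    direction = difference_of_means(monitored, hidden)
    mu_m, mu_h = mean_vector(monitored), mean_vector(hidden)
    midpoint = [(a + b) / 2 for a, b in zip(mu_m, mu_h)]
    threshold = project(midpoint, direction)
    correct = sum(project(v, direction) > threshold for v in monitored)
    correct += sum(project(v, direction) <= threshold for v in hidden)
    return correct / (len(monitored) + len(hidden))

# Toy activations, not real model data.
monitored_acts = [[1.0, 0.2], [0.9, -0.1]]
hidden_acts = [[-1.0, 0.1], [-0.8, 0.0]]
accuracy = separation_accuracy(monitored_acts, hidden_acts)
```

If a single direction really carries the cue, held-out accuracy along it should stay high for natural-sentence and keyword cues alike.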

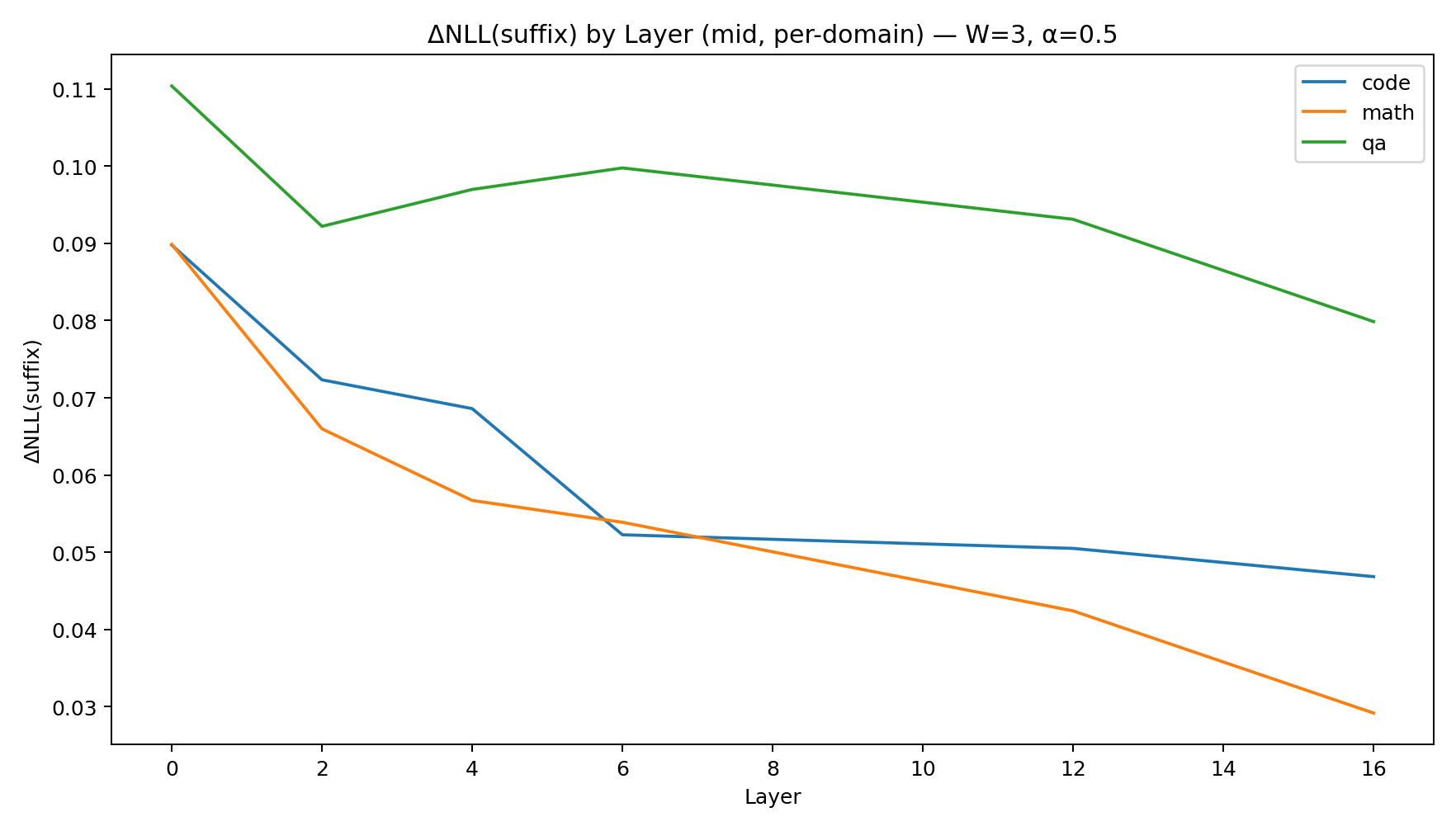

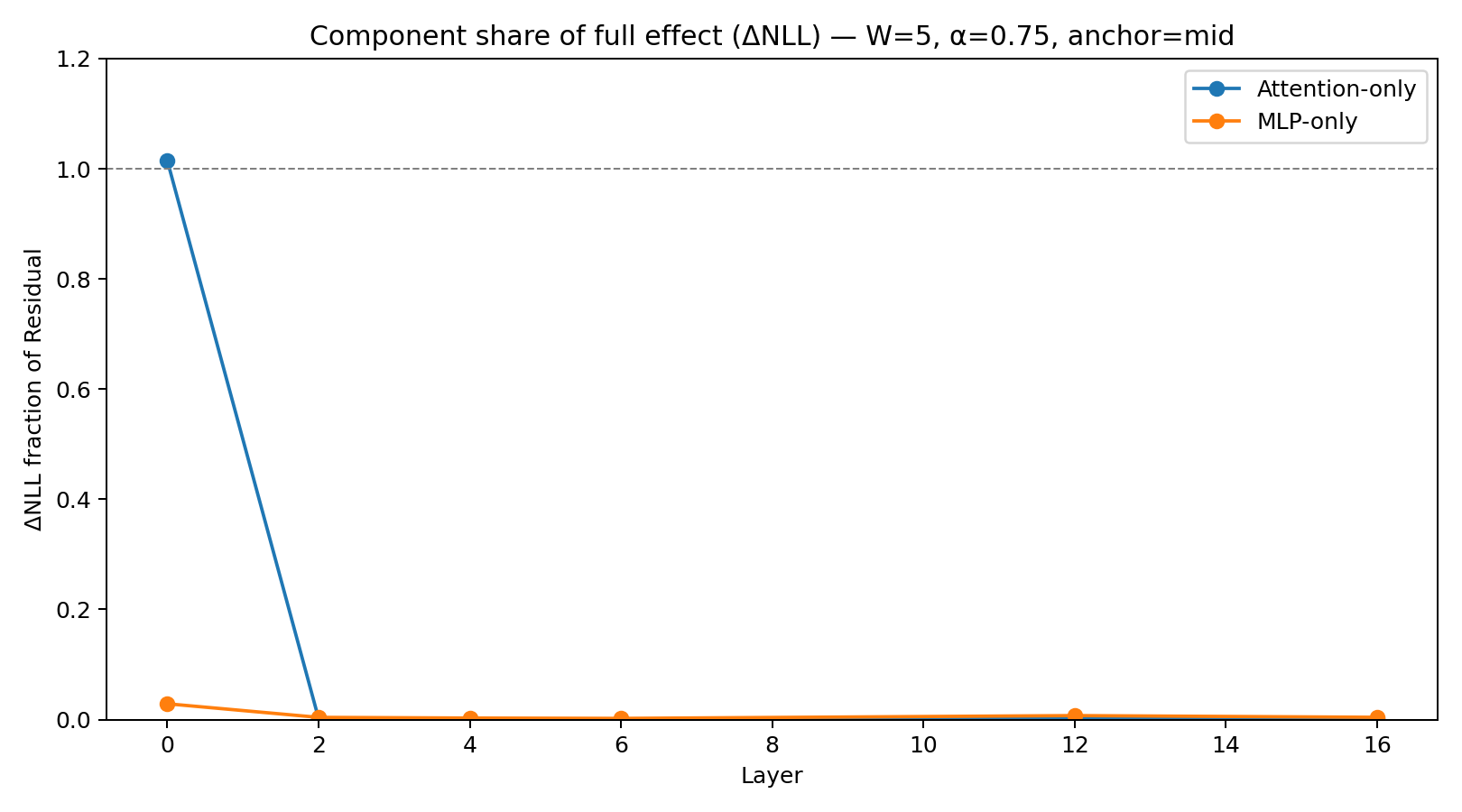

Experiment 3: Causal Patching

To test whether the observed activation changes were causally relevant, I patched early-layer activations from monitored runs into hidden runs. Early patches consistently increased predictive alignment, with the largest ΔNLL in layers 0 to 6. Late-layer patches had almost no measurable influence. KL divergence analyses showed the same pattern, with sharp, localized shifts only near the patched token positions.
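The patching procedure itself can be shown on a toy model. Everything in this sketch is an assumption made for illustration: the causal-mixing layer, the two-way readout from the last position, and the sign convention ΔNLL = NLL(patched) − NLL(clean). It only demonstrates the mechanics of caching activations from one run and substituting them into another. Its numbers say nothing about the real model.

```python
import math

def causal_mix_layer(weight):
    """Toy residual layer: each position adds weight * mean of itself and earlier positions."""
    def layer(h):
        return [h[t] + weight * sum(h[: t + 1]) / (t + 1) for t in range(len(h))]
    return layer

def forward(x, layers, patch=None):
    """Run layers over per-position scalar activations x.

    patch: optional dict {(layer_index, position): value} written into that
    layer's output before the next layer runs.
    Returns (final activations, per-layer cache).
    """
    h, cache = list(x), []
    for i, layer in enumerate(layers):
        h = layer(h)
        if patch:
            for (layer_idx, pos), value in patch.items():
                if layer_idx == i:
                    h[pos] = value
        cache.append(list(h))
    return h, cache

def nll(h, target):
    """Negative log-likelihood of target under a 2-way softmax read from the last position."""
    logits = [h[-1], -h[-1]]
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[target]

def delta_nll_by_layer(layers, hidden_x, monitored_x, position, target):
    """Patch the monitored activation at `position` into the hidden run, one layer at a time."""
    clean, _ = forward(hidden_x, layers)
    _, monitored_cache = forward(monitored_x, layers)
    base = nll(clean, target)
    deltas = []
    for i in range(len(layers)):
        patched, _ = forward(
            hidden_x, layers, patch={(i, position): monitored_cache[i][position]}
        )
        deltas.append(nll(patched, target) - base)
    return deltas

# Toy inputs: the "cue" is a nonzero value at position 0 of the monitored run.
layers = [causal_mix_layer(0.5) for _ in range(3)]
deltas = delta_nll_by_layer(layers, [0.0, 0.0, 0.0], [1.0, 0.0, 0.0], position=0, target=0)
```

In this toy, a patch at the final layer cannot reach the last position, so its delta is zero by construction. In a real transformer, the same loop would run over residual-stream activations captured with forward hooks.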

Experiment 4: Component Attribution

I isolated attention and MLP components to test which subparts carry the cue. Attention-only patches reproduced almost the entire early-layer effect. MLP-only patches showed negligible changes. This identifies early attention pathways as the primary carrier of the monitoring signal as it propagates through the computation.
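Component-level patching follows the same pattern as whole-layer patching, except that only one sublayer's output is swapped. The sketch below is a toy under stated assumptions: `attn` and `mlp` are hypothetical stand-ins with arbitrary weights, and the relative effect sizes come from those weights rather than from any real model.

```python
import math

def attn(h, w=0.5):
    """Toy 'attention': scaled causal mean over the current and earlier positions."""
    return [w * sum(h[: t + 1]) / (t + 1) for t in range(len(h))]

def mlp(h, w=0.1):
    """Toy 'MLP': position-wise nonlinearity."""
    return [w * math.tanh(v) for v in h]

def block(h, attn_override=None, mlp_override=None):
    """Residual block h -> h + attn(h) -> + mlp(.), with optional component overrides."""
    a = attn(h) if attn_override is None else attn_override
    mid = [x + y for x, y in zip(h, a)]
    m = mlp(mid) if mlp_override is None else mlp_override
    return [x + y for x, y in zip(mid, m)], a, m

def run(x, n_layers, patch_layer=None, component=None, source=None):
    """Forward pass; optionally swap one component's output at one layer.

    source: per-layer list of (attn_out, mlp_out) cached from the donor run.
    """
    h, cache = list(x), []
    for i in range(n_layers):
        use = i == patch_layer
        a_over = source[i][0] if use and component == "attn" else None
        m_over = source[i][1] if use and component == "mlp" else None
        h, a, m = block(h, a_over, m_over)
        cache.append((a, m))
    return h, cache

def component_effect(hidden_x, monitored_x, n_layers, layer, component):
    """Absolute shift at the last position caused by patching one component."""
    clean, _ = run(hidden_x, n_layers)
    _, donor = run(monitored_x, n_layers)
    patched, _ = run(hidden_x, n_layers, layer, component, donor)
    return abs(patched[-1] - clean[-1])

# Toy inputs: the monitored run carries a nonzero "cue" at position 0.
attn_effect = component_effect([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], 2, 0, "attn")
mlp_effect = component_effect([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], 2, 0, "mlp")
```

Comparing `attn_effect` and `mlp_effect` layer by layer gives the attribution profile. On a real model the overrides would be applied through hooks on the attention and MLP submodules.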

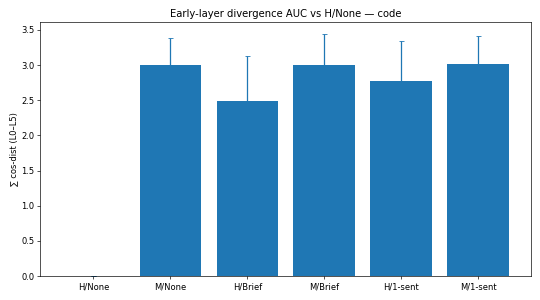

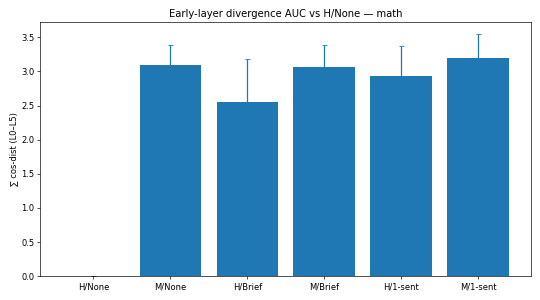

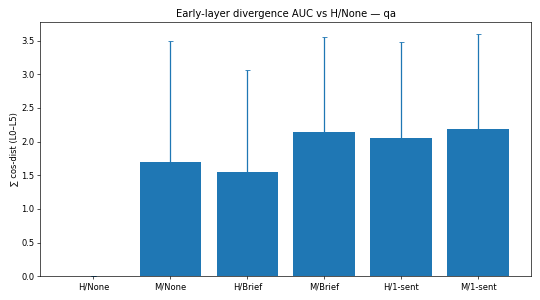

Experiment 5: Monitoring and Concision

I tested whether brevity constraints interact with the monitoring effect by combining monitored or hidden prompts with brief-reasoning instructions and single-sentence constraints. Clarity and concision scores did not change across conditions. Early-layer divergence remained stable and increased only with monitoring, not with brevity. This suggests that concision and monitoring operate through distinct mechanisms.

Conclusion

The experiments provide strong evidence that monitoring signals change internal computation early in the forward pass. The effect is consistent across tasks, robust across cue styles, and causally active only in early layers. Attention mechanisms encode most of the signal, and the divergence begins as soon as the cue is processed.

These findings suggest that monitoring is not only a behavioral instruction but also an internal state switch that reorganizes early reasoning pathways. Understanding this effect could be important for oversight design, chain-of-thought reliability, and future interpretability work that seeks to map metacognitive states inside language models.